Does Your Lighthouse Score Really Affect Your SEO? (The 2026 Reality Check)


In 2026, marketers are being forced into a choice they shouldn’t have to make: build a high-converting, data-driven website or chase a "perfect" score in Google Lighthouse. The reality? You can’t do both.

Lighthouse is a diagnostic tool judging 2026 innovation through the lens of 2016 hardware. This has created a culture of Performance Theater, where technical tricks to fool a bot are prioritized over actual user experience.

It is time to look past the "Red" score. This article explains why the Lighthouse paradox exists, why your SEO depends on Field Data, not synthetic audits, and how to stop chasing vanity metrics that don't drive revenue.

Key takeaways

- Lighthouse judges your 2026 site using a 2016 phone and "Slow 4G." It’s a stress test, not reality.

- Essential tools (A/B testing, Analytics) kill your score but drive your revenue. Don't sacrifice growth for a bot.

- Google's page-experience signals come from real user data (CrUX, reported in Search Console), not synthetic lab scores. If real users are happy, the score is irrelevant.

- "Gaming" the score with tricks often breaks your data and ruins actual UX.

- AI crawlers care about clean HTML and responsiveness, not your 100/100 performance circle.

Lighthouse Score: The "Vanity Metric" Trap

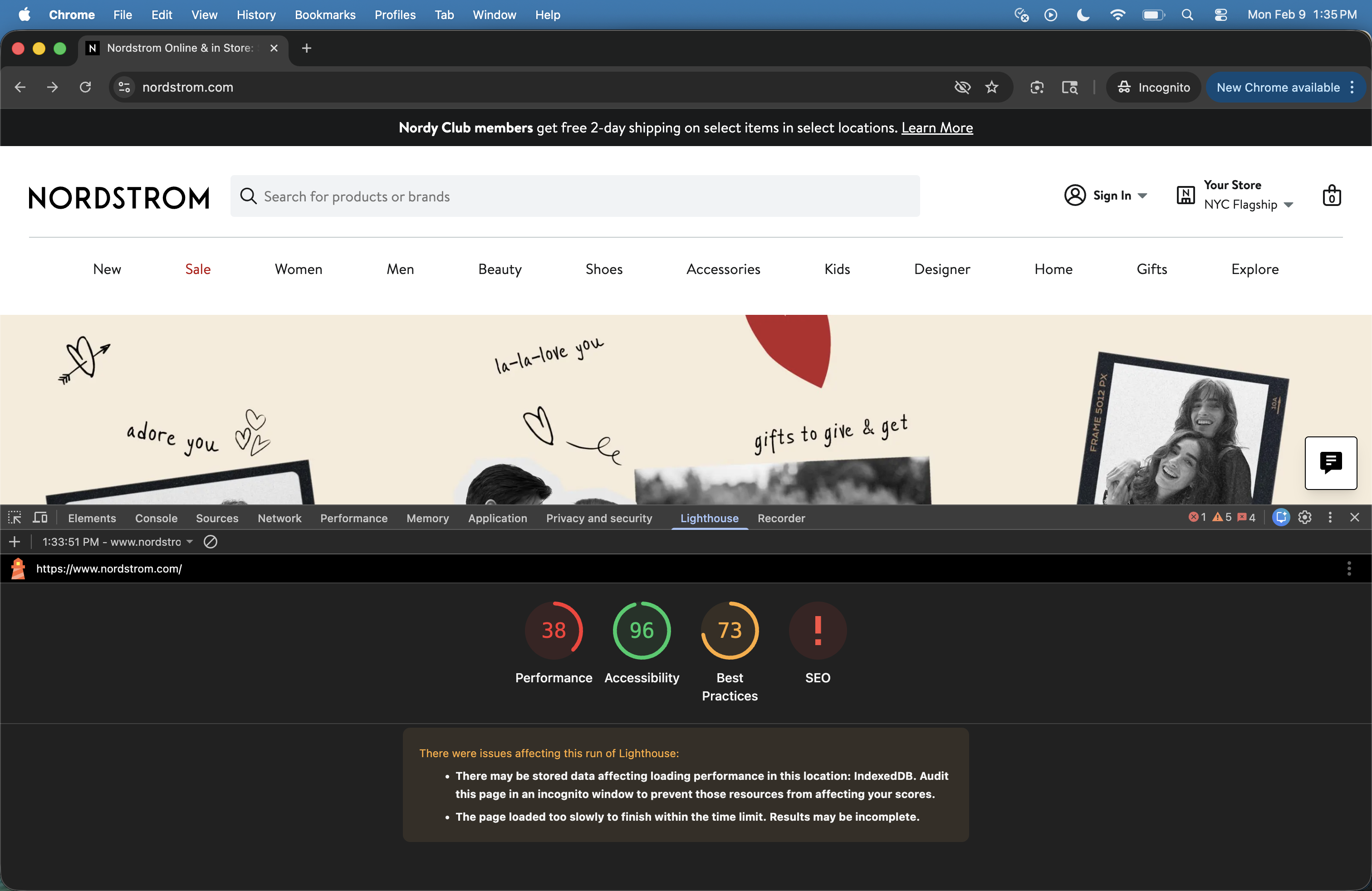

If you open the Nordstrom homepage on a 2026 iPhone, the experience is flawless. High-resolution imagery snaps into view, the navigation is instantaneous, and the checkout flow is seamless.

To a human being, the site is fast. (See our screen recording below.)

To Google Lighthouse, that same site is a failure.

It is common to see world-class, multi-billion-dollar e-commerce sites receive a "Red" score. This is the Lighthouse Score Paradox: a massive, growing disconnect between a synthetic laboratory score and the actual reality of your user’s experience.

The Conflict: Data vs. Performance Theater

For years, marketers have been sold the lie that the Lighthouse score is a definitive grade for their website's health. This has created a toxic environment where marketing and development teams are at war:

- The Marketing Requirement: You need VWO for A/B testing, GA4 for tracking, and Hotjar for user behavior. These tools are the "brain" of your business.

- The Technical Penalty: Lighthouse treats these essential scripts as "bloat." Every tool you add to grow the business is a direct hit to your score.

This isn’t a performance issue; it’s an audit issue. Marketers are being forced to choose between rich features that drive revenue and green circles that offer nothing but bragging rights.

The Goal: Stop Chasing Ghosts

Chasing a 100/100 score in Lighthouse is often an exercise in Performance Theater. It involves developers using technical "tricks" to fool a bot, rather than actually improving the experience for your customers.

The objective of this article is to stop the score-obsession. We are going to look at why a "failing" grade doesn't mean your SEO is dying, why the Lighthouse baseline is a decade out of date, and how to focus on the Field Data that actually rings the cash register and moves your rankings.

The Tech Debt: Benchmarking 2026 on 2016 Hardware

The fundamental problem with Lighthouse isn't the software, it’s the math. When you run a Lighthouse audit, you aren't testing your website against the modern world. You are testing it against a decade-old relic.

The 10-Year Time Warp

Lighthouse relies on "Synthetic Simulation." It doesn't measure what a real user sees; it applies a set of invisible, rigid constraints to estimate a "worst-case scenario." In 2026, those constraints have become absurd:

- Obsolete Hardware: By default, Lighthouse’s mobile audit applies a fixed 4x CPU slowdown calibrated to a mid-range phone, and for years its emulated device was the Motorola Moto G4, released in 2016. While your customers are using 4nm processors and high-speed RAM, Lighthouse is throttling your site to mimic a phone that can’t even run modern apps efficiently.

- The 1.6 Mbps Bottleneck: Lighthouse simulates a "Slow 4G" connection with 1.6 Mbps of throughput and 150 ms of round-trip latency. Average mobile speeds in many developing markets have long since surpassed 8 Mbps. If your audience is in a metropolitan area, they may well be browsing at speeds 100x faster than what Lighthouse is simulating.
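To put those network numbers in perspective, here is a back-of-the-envelope sketch. The 2 MB page weight and the comparison speeds are assumptions for illustration, units are simplified to decimal megabytes and megabits, and the math ignores latency, connection setup, and rendering, so treat it as a rough floor rather than a load-time prediction.

```python
# Illustrative arithmetic only: the payload size and link speeds are assumptions,
# and real page loads also pay for latency, TCP/TLS setup and rendering.

def transfer_seconds(payload_megabytes: float, link_mbps: float) -> float:
    """Time to move a payload over a link, ignoring latency and protocol overhead."""
    megabits = payload_megabytes * 8  # 1 byte = 8 bits
    return megabits / link_mbps


PAYLOAD_MB = 2.0  # hypothetical page weight

for label, mbps in [
    ("Lighthouse 'Slow 4G'", 1.6),
    ("8 Mbps mobile", 8.0),
    ("100x 'Slow 4G'", 160.0),
]:
    print(f"{label:>22}: {transfer_seconds(PAYLOAD_MB, mbps):.2f} s")
```

At these assumed figures, the same 2 MB page needs about 10 seconds of pure transfer time on a 1.6 Mbps profile, 2 seconds at 8 Mbps, and 0.1 seconds at 100x that profile.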

Inclusivity vs. Reality

Google justifies these settings as a way to ensure "inclusivity" for users on the slowest devices and weakest networks. That is a noble goal for a public utility, but it is a disastrous benchmark for a business.

If your target market is luxury fashion, B2B software, or high-end retail, your actual customers aren't using a 2016 Moto G4. By forcing your developers to optimize for a "marginal user" who doesn't exist in your database, Lighthouse forces you to strip away the high-end features that 99% of your actual audience expects and can easily handle.

The "Chance" Factor

Because Lighthouse uses artificial throttling, the scores are notoriously volatile. As our CTO noted, you can run the exact same test five times and get five different scores.

- Test 1: 77 (The simulation loaded the fonts first).

- Test 2: 52 (The simulation decided a script took priority).

Nothing changed on your server. Nothing changed in your code. The only thing that changed was the luck of the simulation.
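If you must report a lab score, one way to tame the luck factor is to run the same audit several times and track the median and the spread instead of any single result; Lighthouse's own documentation on score variability recommends taking the median of multiple runs. The sketch below assumes you have already collected the scores. Apart from 77 and 52, the run values are made up for illustration.

```python
from statistics import median


def summarize_runs(scores: list[int]) -> dict:
    """Summarize repeated Lighthouse performance scores for the same URL."""
    if not scores:
        raise ValueError("need at least one run")
    return {
        "runs": len(scores),
        "median": median(scores),
        "spread": max(scores) - min(scores),  # gap between best and worst run
    }


# Hypothetical five runs: 77 and 52 echo the example above, the rest are illustrative.
print(summarize_runs([77, 52, 64, 70, 58]))
```

A large spread on an unchanged page is a sign that the variation comes from the test, not from your code.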

Lighthouse is a weather forecast for a storm that happened ten years ago. It’s a useful "smoke test" for developers to find broken code, but it is a deeply flawed way to measure the success of a 2026 marketing strategy.

The "Third-Party" Tax: The Innovation Penalty

There is a massive contradiction at the heart of modern marketing. You are told to be data-driven, to A/B test every headline, and to track every millisecond of the user journey. But the moment you add the tools to do that, Lighthouse penalizes you.

This is the Third-Party Tax. It is the "score-dropping" price you pay for running a sophisticated business.

The Conflict: Growth vs. The Audit

A modern marketing stack isn't "bloat"—it’s the engine of your growth. To run a high-performing site in 2026, you likely rely on:

- CRO Tools (VWO/Optimizely): To ensure your layout actually converts.

- Advanced Analytics (GA4/Mixpanel/New Relic): To understand user behavior and system health.

- Social & Ad Pixels (TikTok/Meta/Google Ads): To power your attribution and retargeting.

- Customer Experience Tools (Hotjar/Intercom): To support and listen to your users.

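Lighthouse sees these as 15+ "obstacles" to a fast load. It measures how long their JavaScript keeps the browser's main thread busy, counts that against Total Blocking Time (the most heavily weighted metric, at 30% of the performance score in recent versions), and slashes your score accordingly.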
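Roughly how that penalty is computed: Total Blocking Time adds up, for every main-thread task longer than 50 ms between First Contentful Paint and Time to Interactive, the portion of the task beyond 50 ms. The minimal sketch below assumes you already have the task durations (for example, from a performance trace) and that they all fall inside that window; the task values are hypothetical.

```python
LONG_TASK_MS = 50  # main-thread tasks longer than this count as "long tasks"


def total_blocking_time(task_durations_ms: list[float]) -> float:
    """Sum the part of each long task beyond 50 ms (the "blocking" portion).

    Assumes every duration is a main-thread task that already falls between
    First Contentful Paint and Time to Interactive.
    """
    return sum(d - LONG_TASK_MS for d in task_durations_ms if d > LONG_TASK_MS)


# Hypothetical tasks: two third-party scripts (120 ms, 90 ms) and one short task (30 ms).
print(total_blocking_time([120, 90, 30]))  # 110
```

This is why a handful of third-party scripts can move the score noticeably: each long task is counted, whether or not a real user ever tried to interact while it ran.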

The Developer's Dilemma

Because the Lighthouse score is so visible to leadership, developers are often forced to use "tricks" to win back those lost points. They might defer your A/B testing script by three seconds or load your analytics only after the user scrolls.

The result? Your data breaks. If a user bounces in two seconds, your "deferred" analytics never saw them. If your A/B test script is delayed, the user sees a "flash of original content" before the test swaps in, ruining the experiment. You are effectively breaking your business tools just to make a synthetic audit happy.
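The data loss is easy to model. This simplified sketch assumes a visit is only recorded if the visitor is still on the page when the deferred analytics tag fires; the dwell times and the 3-second delay are hypothetical.

```python
def invisible_session_share(session_seconds: list[float], analytics_delay_s: float) -> float:
    """Fraction of visits that end before a deferred analytics tag loads.

    Simplified model: a visit is recorded only if the visitor is still on
    the page when the tag fires.
    """
    if not session_seconds:
        return 0.0
    missed = sum(1 for s in session_seconds if s < analytics_delay_s)
    return missed / len(session_seconds)


# Hypothetical dwell times (seconds) for ten visits, with the tag deferred by 3 seconds.
dwell = [1.2, 1.8, 2.5, 4.0, 7.5, 12.0, 0.9, 30.0, 3.5, 2.0]
print(f"{invisible_session_share(dwell, 3.0):.0%} of visits never reach analytics")
```

With these made-up numbers, half of the visits end before the tag loads, so they never appear in your reports at all.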

The Myth of the "Clean" Page

It’s easy to get a 100/100 Lighthouse score on a blank page with no tracking, no pixels, and no interactivity. But a "clean" page doesn't sell products.

As our CTO highlighted, if you load a site and it feels fast, but Lighthouse is screaming about "Total Blocking Time," it’s often just the analytics tools doing their job. If you have 15 analytics tools and the site still feels responsive to a real human, that is a win. You have successfully balanced data collection with user experience.

The Bottom Line: Don’t let a synthetic score dictate your tech stack. If a tool like VWO or New Relic provides more value in data than it costs in real-world latency, it belongs on your site. Period. Lighthouse doesn't pay your bills; your data-driven decisions do.

Lab Data vs. Field Data: The SEO Reality Check

If Lighthouse isn’t a direct ranking factor, why does everyone act like a 50/100 score is a death sentence for SEO?

The confusion stems from a failure to distinguish between Lab Data and Field Data. To win at SEO in 2026, you need to ignore the "Lab" and master the "Field."

The Lab: A Controlled Fantasy

Lighthouse is Lab Data. It is a synthetic, one-off test run in a vacuum. It’s useful for catching a massive code error before you push it live, but Google does not use this "grade" to determine where you sit in the search results. Why? Because a bot’s opinion of your site doesn’t matter as much as a human’s experience.

The Field: Where the SEO Battle is Won

Google’s actual ranking signals come from Field Data collected in the Chrome User Experience Report (CrUX). This is real-world telemetry gathered from opted-in Chrome users visiting your site on their actual devices.

When Google evaluates your "Page Experience," they aren't looking at your Lighthouse score; they are looking at your Core Web Vitals (CWV) in the field:

- LCP (Largest Contentful Paint): How fast did the main content actually appear for your users?

- CLS (Cumulative Layout Shift): Did the page jump around while they were trying to click a button?

- INP (Interaction to Next Paint): How responsive was the site when they actually tapped the screen?
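Each of these metrics is judged against fixed thresholds published by Google: LCP is "good" at 2.5 seconds or less, INP at 200 ms or less, and CLS at 0.1 or less, with "poor" starting above 4 seconds, 500 ms, and 0.25 respectively. Here is a minimal sketch of that rating logic, assuming you already have the 75th-percentile value for each metric (the sample values are hypothetical):

```python
# Core Web Vitals thresholds: (good_max, needs_improvement_max).
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def rate(metric: str, p75_value: float) -> str:
    """Rate a metric's 75th-percentile field value."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if p75_value <= good_max:
        return "good"
    if p75_value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


# Hypothetical field values for one page.
field = {"LCP": 2100, "INP": 240, "CLS": 0.05}
print({metric: rate(metric, value) for metric, value in field.items()})
```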

The Reality Check: Google Search Console vs. Google Lighthouse

This is where it gets interesting: A site can have a "Red" Lighthouse score of 40 and still be "Green" in Google Search Console.

We’ve seen this repeatedly in our own tests. A site loads in under a second for 90% of real-world users (Field Data), but because it carries the "Third-Party Tax" of analytics and A/B testing, Lighthouse (Lab Data) gives it a failing grade.

Google's algorithm prioritizes those real users over a single Lighthouse run.

To stop the internal debates between marketing and dev teams, you have to understand that these two tools are not measuring the same thing. They are looking at two different worlds.

Google Search Console (The Truth)

What it shows: How real users experienced your site over time. It answers: “Did most real people using Chrome have a good experience?”

- Source: Based on Field Data (Chrome User Experience Report / CrUX).

- Volume: Aggregated across thousands or millions of actual visits.

- Methodology: Uses percentiles (usually the 75th percentile) to show what the vast majority of your audience sees.

- Timing: Updated on a delay (days to weeks) to ensure statistical accuracy.

- SEO Weight: This is the data Google actually trusts for search quality signals and ranking thresholds.
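To see what "75th percentile over a rolling window" means in practice, here is a simplified sketch. It assumes you have raw (date, value) samples for one metric, uses the nearest-rank percentile method, and applies a 28-day window. CrUX itself works from aggregated histograms, so this approximates the idea rather than reproducing Google's exact computation. The sample LCP values are hypothetical.

```python
import math
from datetime import date, timedelta


def p75(values):
    """75th percentile via the nearest-rank method (a simplification of CrUX's histograms)."""
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def trailing_p75(samples, end, window_days=28):
    """p75 of every sample dated within the window_days ending on `end` (inclusive)."""
    start = end - timedelta(days=window_days - 1)
    in_window = [value for day, value in samples if start <= day <= end]
    if not in_window:
        raise ValueError("no field samples in the window")
    return p75(in_window)


# Hypothetical LCP samples in milliseconds: (visit date, LCP).
lcp_samples = [
    (date(2025, 12, 1), 9000),  # outside the window ending 2026-01-28
    (date(2026, 1, 1), 1800),
    (date(2026, 1, 10), 2600),
    (date(2026, 1, 20), 1500),
    (date(2026, 1, 28), 2200),
]
print(trailing_p75(lcp_samples, end=date(2026, 1, 28)))  # 2200
```

The December outlier falls outside the window, and the single slowest visit inside it does not set the result: the p75 reflects what most visitors actually saw.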

Google Lighthouse (The Diagnostic)

What it shows: How your site performs in a single synthetic test under controlled conditions. It answers: “How would this page behave on a hypothetical slow phone, right now, under artificial constraints?”

- Source: Lab Data only.

- Volume: One page, one run, one device profile.

- Methodology: Uses simulated throttling (slow device + slow network) with a cold cache and no user behavior.

- Timing: A "right now" snapshot that is highly sensitive to timing, resource order, and randomness.

- SEO Weight: It is a diagnostic tool for developers, not a measurement of lived reality or a direct ranking signal.

The Marketer’s Strategy

If you want to improve your SEO, stop staring at the Lighthouse panel in your browser. Instead:

- Open Google Search Console: Look at the "Core Web Vitals" report. This is the only performance data Google actually uses for ranking.

- Look for Trends, Not Snapshots: Field data is aggregated over a rolling 28-day window. It filters out the "luck" of a single synthetic test and shows you the truth of your user experience.

- Pass the Threshold: Google doesn't reward you more for having a "perfect" score. They just want you to be in the "Good" (Green) threshold. Once you're there, further optimization offers diminishing returns for SEO.

If your real users are happy and your Search Console is Green, your Lighthouse score is irrelevant. Focus your dev resources on maintaining that real-world experience, not on chasing a 100/100 lab score that Google ignores.

The New Frontier: Performance in the Age of AI Search

As we move into 2026, a new player has entered the performance debate: The AI Crawler. Platforms like Perplexity, SearchGPT, and Gemini are no longer just "indexing" your keywords; they are "ingesting" your brand to provide instant answers.

If you thought humans were impatient, meet the AI agents. For these systems, website performance isn't just about "speed"; it's about eligibility.

AI Crawlers Don't "Wait" for JavaScript

Many AI crawlers and Large Language Models (LLMs) operate on a "Text-First" basis. If your website relies on heavy, client-side JavaScript to render its content (a common side effect of "gaming" Lighthouse scores by deferring everything), an AI crawler might see a blank page.

If the AI bot can’t see your content in the initial HTML "handshake," your brand won't be cited in the answer. You aren't just losing a Lighthouse point; you’re losing a seat at the table in the AI-driven search results.
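A quick way to approximate what a text-first crawler sees is to look at the raw HTML your server returns, before any JavaScript runs. The sketch below uses only the standard library's HTMLParser. The class and function names and the key phrases are hypothetical, and real AI crawlers differ in how much (if any) JavaScript they execute.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text a non-JavaScript crawler would read, skipping <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.chunks: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_initial_html(html: str, key_phrases: list[str]) -> list[str]:
    """Return the key phrases that do not appear as text in the raw HTML."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()  # normalize whitespace
    return [phrase for phrase in key_phrases if phrase.lower() not in text]


# A client-rendered shell versus a server-rendered page (both hypothetical).
shell = '<html><body><div id="root"></div><script>render("Free shipping on all orders")</script></body></html>'
ssr = "<html><body><h1>Free shipping on all orders</h1></body></html>"
print(missing_from_initial_html(shell, ["Free shipping"]))
print(missing_from_initial_html(ssr, ["Free shipping"]))
```

In practice you would feed it the body of a plain HTTP request, for example `urllib.request.urlopen(url).read().decode()`, rather than the rendered DOM you see in DevTools.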

Performance as a Trust Signal for AI

Recent data shows a clear trend: AI search engines prioritize authoritative and stable sources.

- The INP Factor: If your site has a high "Interaction to Next Paint" (INP) lag, it signals to AI algorithms that your technical infrastructure is unreliable.

- The SEO Overlap: AI systems often use Google’s "Field Data" (CrUX) as a proxy for quality. If real users are bouncing because of a clunky, unstable experience, AI models are less likely to "trust" and cite your content as a primary source.

The "Agentic Commerce" Reality

We are moving toward Agentic Commerce, where AI agents will soon perform tasks for users—like booking a flight or buying a product—directly. These agents require "clean" technical health. A site that is bogged down by 20+ legacy tracking pixels and unoptimized code isn't just slow for a human; it’s a "broken" environment for an AI agent to navigate.

You shouldn’t optimize for Lighthouse, but you must optimize for crawlability. Ensuring your core content is visible in the initial HTML and maintaining a "Green" status in your Core Web Vitals is no longer just an SEO tactic; it’s your "Entry Ticket" into the AI search ecosystem.

Strategic Recommendations: The Marketer’s Action Plan for 2026

The goal of web performance in 2026 isn't to win a "Best in Code" award from a bot. It is to ensure that your actual customers, the ones paying your bills, have a fast, seamless, and high-converting experience.

If you want to move away from the "Performance Theater" and toward actual growth, here is your strategic roadmap:

1. Audit Your "Tag Bloat" (With a Business Mindset)

Don’t let your developers just delete scripts to save points. Instead, conduct a "Value vs. Weight" audit:

- The Question: "Does this tool provide more revenue/insight than the 200ms of lag it costs?"

- The Action: If you have three tools doing the same thing (e.g., GA4, Mixpanel, and a Facebook Pixel all tracking the same button click), consolidate them. Every millisecond of "Total Blocking Time" should be paying for itself in data.
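A simple starting point for the consolidation step is an inventory of which tools capture which events. This sketch assumes you have already built that inventory (the tools and event names below are hypothetical examples) and lists every event tracked by more than one tool.

```python
from collections import defaultdict


def overlapping_events(tool_events: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each event to the tools that capture it, keeping only events tracked twice or more."""
    by_event: dict[str, list[str]] = defaultdict(list)
    for tool, events in tool_events.items():
        for event in events:
            by_event[event].append(tool)
    return {event: sorted(tools) for event, tools in by_event.items() if len(tools) > 1}


# Hypothetical inventory from a tag audit.
inventory = {
    "GA4": {"add_to_cart", "page_view", "signup_click"},
    "Mixpanel": {"add_to_cart", "signup_click"},
    "Facebook Pixel": {"add_to_cart"},
    "Hotjar": {"session_recording"},
}
print(overlapping_events(inventory))
```

Each duplicated event is a candidate for consolidation; whether a tool stays is still the business question above, not a script-count exercise.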

2. Prioritize Field Data in Your Reports

Stop putting a single Lighthouse score on your executive dashboards. It’s too volatile and misleading.

- The New KPI: Use the Core Web Vitals report from Google Search Console.

- The Goal: Aim for "Green" status, which means the 75th percentile of your real users' visits falls within the "Good" threshold for each metric. Once you are in the "Good" zone, stop optimizing for speed and start optimizing for conversion.
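If your dashboards are built in code, the Chrome UX Report (CrUX) API exposes the same kind of rolling 28-day field data that feeds Search Console's Core Web Vitals report. The sketch below builds a query and extracts the 75th-percentile values. It assumes you have a CrUX API key; the helper names are hypothetical, and the parsing is exercised against a hand-written sample response rather than live data.

```python
import json
import urllib.request

# CrUX API endpoint; requires an API key from a Google Cloud project.
CRUX_ENDPOINT = "https://chromeuxreport.googleapis.com/v1/records:queryRecord"


def build_crux_query(origin: str, form_factor: str = "PHONE") -> dict:
    """Request body asking for the three Core Web Vitals for a whole origin."""
    return {
        "origin": origin,
        "formFactor": form_factor,
        "metrics": [
            "largest_contentful_paint",
            "interaction_to_next_paint",
            "cumulative_layout_shift",
        ],
    }


def p75_from_response(response: dict) -> dict:
    """Pull each metric's p75; CLS arrives as a string, so everything is cast to float."""
    metrics = response["record"]["metrics"]
    return {
        name: float(data["percentiles"]["p75"])
        for name, data in metrics.items()
        if "percentiles" in data
    }


def fetch_field_p75(origin: str, api_key: str) -> dict:
    """Hypothetical helper: live call to the CrUX API (needs network access and a key)."""
    body = json.dumps(build_crux_query(origin)).encode("utf-8")
    request = urllib.request.Request(
        f"{CRUX_ENDPOINT}?key={api_key}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as resp:
        return p75_from_response(json.load(resp))


# Hand-written sample shaped like a CrUX API response (values are illustrative).
sample = {
    "record": {
        "key": {"origin": "https://example.com"},
        "metrics": {
            "largest_contentful_paint": {"percentiles": {"p75": 2100}},
            "cumulative_layout_shift": {"percentiles": {"p75": "0.05"}},
        },
    }
}
print(p75_from_response(sample))
```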

3. Move Beyond the "Moto G4" Baseline

Ask your technical team to calibrate their testing to your actual audience.

- The Reality: If your CRM data shows that 80% of your users are on high-end iPhones and 5G, testing against a 2016 budget phone is a waste of resources.

- The Action: Use tools that allow for "Custom Throttling." Test against the devices your customers actually use, not a global average that includes devices from a decade ago.
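Lighthouse itself lets you override its default throttling. The sketch below only builds a command line for the Lighthouse CLI with custom simulated throttling. The audience profile (40 ms round trip, 10 Mbps, 2x CPU slowdown) is a hypothetical example, not a recommendation, and you should check the flag names against the help output of the Lighthouse version you have installed.

```python
def lighthouse_cli_args(url: str, rtt_ms: int, throughput_kbps: int,
                        cpu_slowdown: int, output_path: str = "report.json") -> list:
    """Build a Lighthouse CLI invocation with custom simulated throttling values."""
    return [
        "lighthouse",
        url,
        "--throttling-method=simulate",                        # keep simulated throttling
        f"--throttling.rttMs={rtt_ms}",                        # simulated round-trip time
        f"--throttling.throughputKbps={throughput_kbps}",      # simulated bandwidth
        f"--throttling.cpuSlowdownMultiplier={cpu_slowdown}",  # 1 means no CPU slowdown
        "--output=json",
        f"--output-path={output_path}",
    ]


# Hypothetical profile for an audience mostly on recent phones and fast networks.
args = lighthouse_cli_args("https://example.com", rtt_ms=40, throughput_kbps=10240, cpu_slowdown=2)
print(" ".join(args))
```

Passing the list to `subprocess.run(args, check=True)` would run the audit, assuming the `lighthouse` npm package and Chrome are installed. Running your audience profile next to the default one shows how much of a low score comes from the throttling assumptions rather than from your code.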

4. Implement "Islands of Interactivity"

Instead of "deferring" scripts (which breaks your data), work with your team to implement modern architectures like Islands Architecture (Astro) or Resumability (Qwik).

- The Benefit: These frameworks allow the page to be "instantly" interactive for the user without the massive JavaScript execution delay that Lighthouse hates. It’s the ultimate way to have your rich features and your performance, too.

5. Focus on the "Perceived" Speed

Performance is a feeling, not just a number.

- Action: Prioritize Visual Stability (CLS) and Responsiveness (INP). If a user clicks a button and the site reacts instantly, they won't care if a tracking pixel took an extra 500ms to fire in the background.

Lighthouse is a diagnostic tool for a laboratory; your website is a living business in the real world. In 2026, the brands that win will be the ones that stop chasing "Green Circles" and start obsessing over the real people on the other side of the screen.

Don't play the game. Build the experience.